GenAI Agents in Drilling Process Orchestration

Automation of wellsite operations

Automation of wellsite operations is not new. Over the last 10 years the industry has seen several innovations in this area, in both drilling and wireline operations. For example, DrillOps has offered drilling automation and advisory capabilities for some time, and Neuro Autonomous Well Intervention has enabled automation of wireline and coiled tubing intervention operations. The benefit of automation is not always a smaller crew; in many cases we have seen improved performance, better adherence to procedures and greater consistency across operations. A human is always in the loop, and in many cases the automation systems have to work together with humans to achieve a goal.

Current approaches tend to be somewhat rigid, both in the domains where the automation can be applied and in the ways the user can interact with the system and customize its behavior. Every rig tends to have its own unique characteristics, and rig operators have their own standard operating procedures. Even within a single operator, those procedures vary from well to well.

What if it was possible to customize an automation system through natural language procedures and instructions? What if you could simply interact and direct that system through a natural language interface?

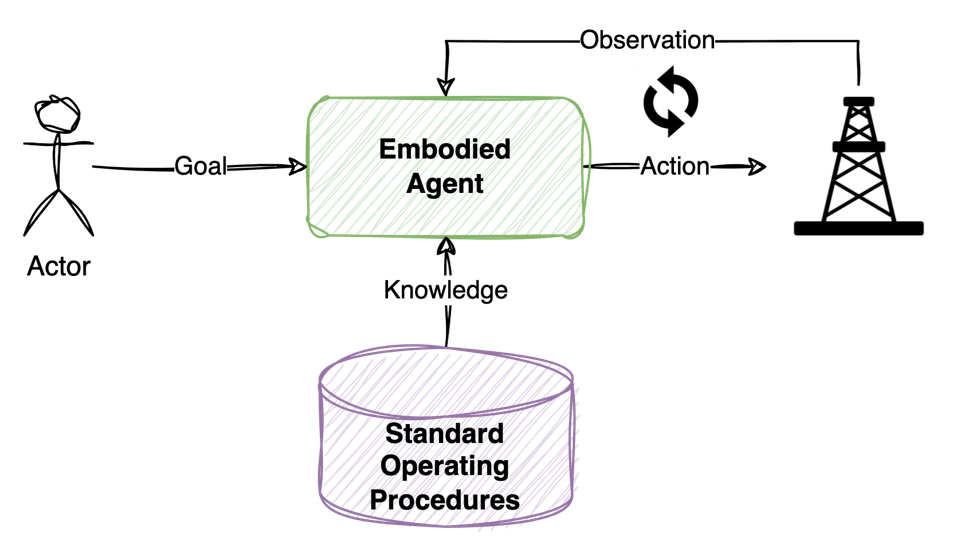

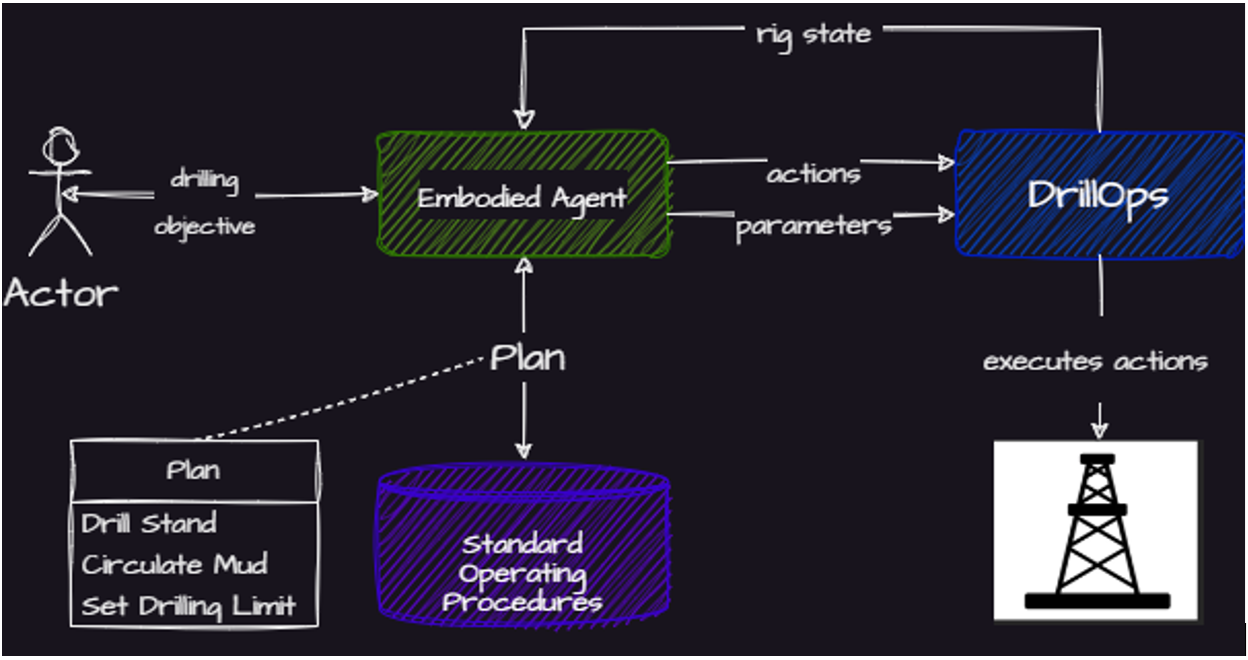

The emergence of foundational large language models (LLMs) has made this a reality. LLMs are typically used as conversational chatbots; however, the underlying technology can be used to engineer an agent that understands natural language procedures and user goals. The agent can reason to generate compliant plans and then take physical actions that accomplish a goal given by a human. An agent that couples a large language model with its environment in this way is typically referred to as a GenAI-enabled embodied agent.

In its simplest form, the agent receives a goal from the user in natural language (e.g. “Drill to 5000 ft, after 2000 ft increase the WOB by 20% also if the ROP drops below 50 ft/hr increase the WOB by a further 10%”). The agent then uses any knowledge provided (procedures and material written in natural language) to generate a plan that complies with the provided practices. It then selects the actions needed to execute that plan, while monitoring the state of the environment to ensure everything goes as expected. If an observation deviates from what was expected, the agent revises its plan while still complying with the provided procedures. The agent repeats this loop without requiring additional input from the user, although the user can interact with the agent at any time through natural language.
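To make the example goal above concrete, the sketch below shows one way its conditions could be represented once the agent has interpreted them. It is an illustration only: the `Rule` class, the `build_rules` and `step` functions and the observation keys are hypothetical names, and it assumes that each adjustment fires once, that the ROP rule applies at any depth, and that the "further 10%" compounds on the current WOB. None of these choices are stated in the original goal.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical observation format, e.g. {"depth_ft": 2100.0, "rop_ft_hr": 45.0}
Observation = Dict[str, float]


@dataclass
class Rule:
    """A one-shot conditional WOB adjustment derived from the user's goal."""
    name: str
    condition: Callable[[Observation], bool]
    wob_factor: float
    fired: bool = False


def build_rules() -> List[Rule]:
    # Assumed reading of the example goal; a real agent would derive this itself.
    return [
        Rule("after 2000 ft: WOB +20%", lambda o: o["depth_ft"] > 2000.0, 1.20),
        Rule("ROP below 50 ft/hr: WOB +10%", lambda o: o["rop_ft_hr"] < 50.0, 1.10),
    ]


def step(wob: float, obs: Observation, rules: List[Rule],
         target_depth_ft: float = 5000.0) -> Tuple[float, bool]:
    """Apply each newly satisfied rule once and report whether the goal is met."""
    for rule in rules:
        if not rule.fired and rule.condition(obs):
            wob *= rule.wob_factor  # assumption: increases compound
            rule.fired = True
    return wob, obs["depth_ft"] >= target_depth_ft
```

Calling `step` on each new observation returns the updated WOB setpoint and a flag that becomes true once the target depth is reached.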

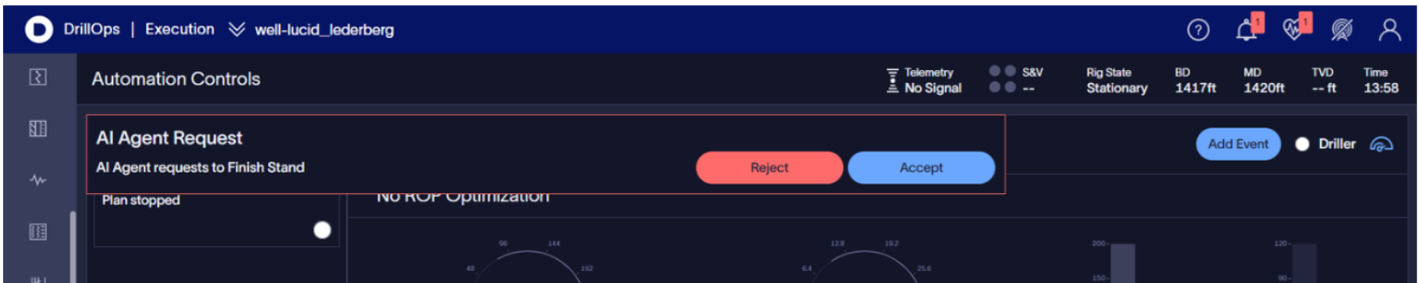

Actions could be dispatched to a traditional control or automation system but in many cases those actions need to be executed by a human. The agent will prompt the human to take the action while observing the environment to ensure the action is taken as instructed. Any deviations from procedures can be detected and a risk analysis could be provided to the human.
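The routing described above could look roughly like the following sketch. The `dispatch` function and its parameters are hypothetical: `automated` stands in for commands a control system accepts, `prompt_human` for whatever channel reaches the crew, and `verify` for a check against observed rig state.

```python
from typing import Callable, Dict


def dispatch(action: str,
             automated: Dict[str, Callable[[], None]],
             prompt_human: Callable[[str], None],
             verify: Callable[[str], bool]) -> str:
    """Send an action to automation if supported, otherwise ask a human,
    then check the environment to confirm the action was carried out."""
    if action in automated:
        automated[action]()          # hand over to the control system
        channel = "automation"
    else:
        prompt_human(f"Please perform: {action}")
        channel = "human"
    # verify() is assumed to inspect observations, not to trust the sender
    status = "confirmed" if verify(action) else "deviation"
    return f"{channel}:{status}"
```

A `deviation` result is the point at which the agent could raise a risk analysis with the human rather than silently continuing.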

What makes embodied agents different from the typical chatbot use of LLMs is that the agent iterates through multiple reason, act and observe cycles until the goal is met. An embodied agent does that by interacting with the environment.

The key problem this approach is trying to solve is to simplify the process of taking knowledge and translating it to actions. The traditional process automation methodology involves an expert understanding the domain (by reading documents and talking to expert users) and trying to convert that knowledge to an algorithm that can be given to a computer to execute. This is a very complex and time-consuming process and can't be easily customized for each rig and operator.

So how does one use a foundational LLM that is not designed to control wellsite equipment and does not have the knowledge to do so?

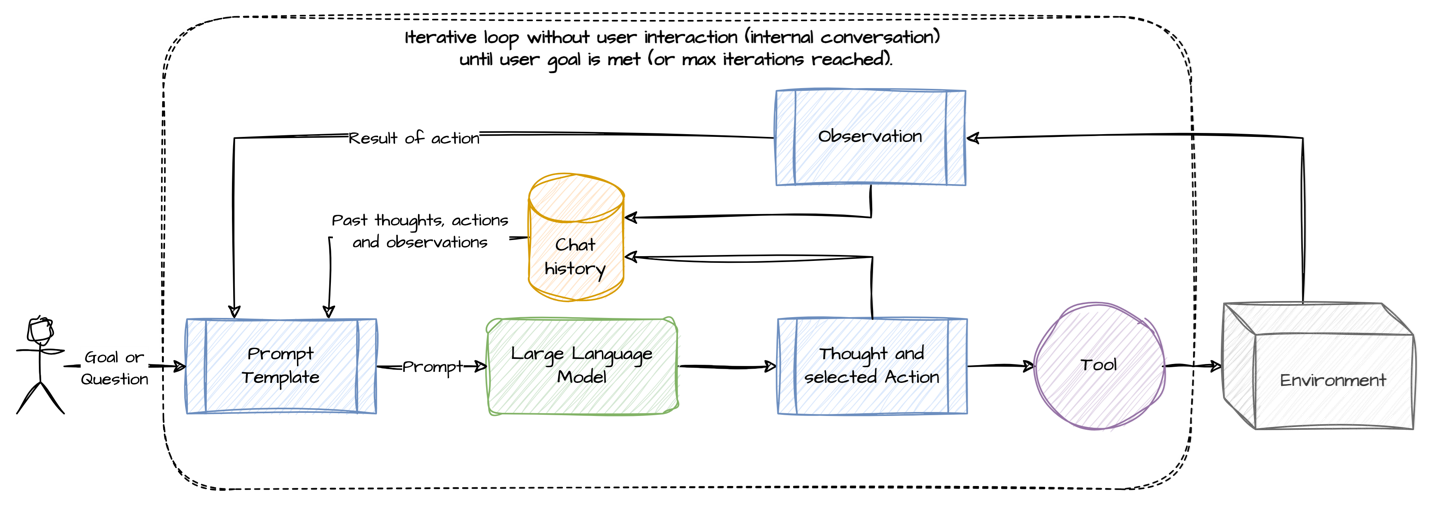

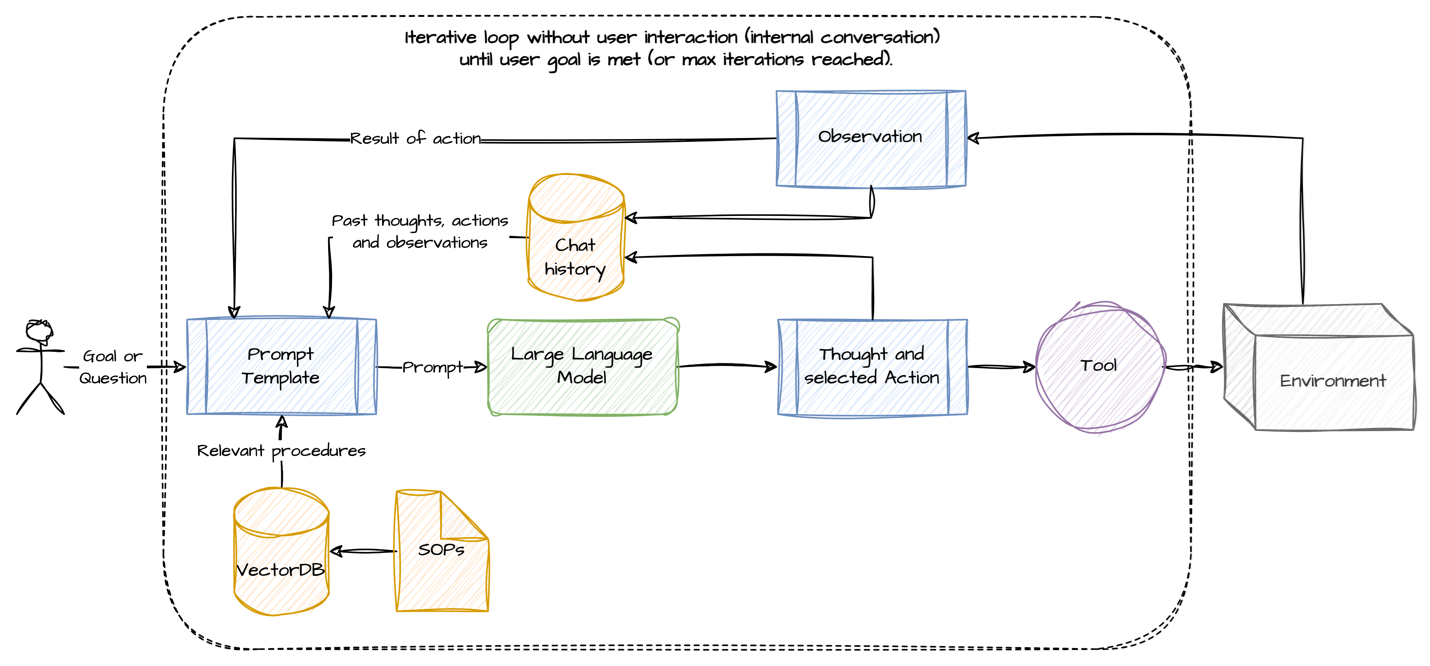

The answer is to build an agentic workflow around that LLM. Several papers have explained how a foundational model can be instructed to reason, plan and act. Those approaches typically involve asking the LLM to plan ahead in order to reach a certain goal, and providing the LLM with a collection of actions that it can take to execute that plan (and hence achieve the goal). After an action is taken, an observation of the environment is made and given to the LLM. This gives the LLM real-time feedback so that it can decide whether the current plan is progressing or stalled. The LLM is invoked iteratively, using this plan, act and observe loop, until the goal is reached.
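The plan, act and observe loop can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used in the demonstrator: `plan_fn` is a stand-in for an LLM call (here replaced by a trivial rule-based planner), `SimulatedRig` is a toy environment, and the 90 ft stand length is an assumed value.

```python
from typing import Callable, Dict, List, Tuple

Observation = Dict[str, float]
History = List[Tuple[str, Observation]]


def run_agent(goal_met: Callable[[Observation], bool],
              plan_fn: Callable[[Observation, History], str],
              actions: Dict[str, Callable[[], None]],
              observe: Callable[[], Observation],
              max_iterations: int = 50) -> History:
    """Iterate plan -> act -> observe until the goal is met."""
    history: History = []
    obs = observe()
    for _ in range(max_iterations):
        if goal_met(obs):
            return history
        action = plan_fn(obs, history)      # stand-in for an LLM invocation
        if action not in actions:
            raise ValueError(f"unknown action: {action}")
        actions[action]()                   # act
        obs = observe()                     # observe
        history.append((action, obs))
    raise RuntimeError("goal not reached within the iteration budget")


class SimulatedRig:
    """Toy environment; drilling only makes progress with the bit on bottom."""
    def __init__(self) -> None:
        self.depth_ft = 0.0
        self.bit_on_bottom = False

    def trip_in(self) -> None:
        self.bit_on_bottom = True

    def drill_stand(self) -> None:
        if self.bit_on_bottom:
            self.depth_ft += 90.0  # assumed stand length

    def observe(self) -> Observation:
        return {"depth_ft": self.depth_ft,
                "bit_on_bottom": float(self.bit_on_bottom)}


def rule_based_planner(obs: Observation, history: History) -> str:
    # Hypothetical replacement for the LLM: trip in first if off bottom.
    return "drill_stand" if obs["bit_on_bottom"] else "trip_in"
```

Running `run_agent` against a `SimulatedRig` with a depth goal makes the planner issue `trip_in` before the first `drill_stand`, because the observation shows the bit is not on bottom.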

This approach works remarkably well; however, there is one important piece missing: domain-specific knowledge. State-of-the-art models have very limited knowledge of how to drill a well, and any knowledge they do have might not match the operating practices of a given operator. This problem can be solved by dynamically injecting relevant content into the LLM prompt on each plan, act, observe iteration. There are already established patterns to achieve this, the most popular being Retrieval Augmented Generation (RAG). A database is used to store the procedures that contain the necessary information on how to drill a well. Those procedures could be documents and training material that already exist for human operators. The database is indexed using what are referred to as embedding vectors. The embeddings are vector representations of the semantic meaning of those documents, and they are used to retrieve the content most relevant to the problem the agent is trying to solve.

So, for example, if the goal given to the agent was to “Drill until a depth of 10,000 ft is reached”, then the agent will retrieve information related to drilling a stand, adding a stand, how to set drilling parameters and other best practices related to the drilling process. That content is injected into the prompt, and this is what makes a general-purpose LLM follow domain-specific procedures.
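The retrieval step can be illustrated with a deliberately simplified sketch. A production system would use a learned embedding model and a vector database; here a bag-of-words count vector stands in for the embedding so the example stays self-contained. The function names and the procedure texts are invented for illustration.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would use a learned model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, procedures: Dict[str, str], k: int = 2) -> List[str]:
    """Return the names of the k procedures most similar to the query."""
    q = embed(query)
    ranked = sorted(procedures,
                    key=lambda name: cosine(q, embed(procedures[name])),
                    reverse=True)
    return ranked[:k]


def build_prompt(goal: str, procedures: Dict[str, str], k: int = 2) -> str:
    """Inject the retrieved procedures into the prompt ahead of the goal."""
    context = "\n".join(f"[{name}] {procedures[name]}"
                        for name in retrieve(goal, procedures, k))
    return f"Procedures:\n{context}\n\nGoal: {goal}"


# Hypothetical procedure snippets, not taken from any real operating manual.
PROCEDURES = {
    "drill_a_stand": "To drill a stand, set drilling parameters, "
                     "then drill until the stand is down.",
    "add_a_stand": "To add a stand, pick up off bottom, set slips "
                   "and make up the connection.",
    "wireline_rigup": "Rig up the wireline unit and pressure test the lubricator.",
}
```

With the goal from the example above, `retrieve` ranks the drilling procedure first and leaves the unrelated wireline procedure out of the prompt.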

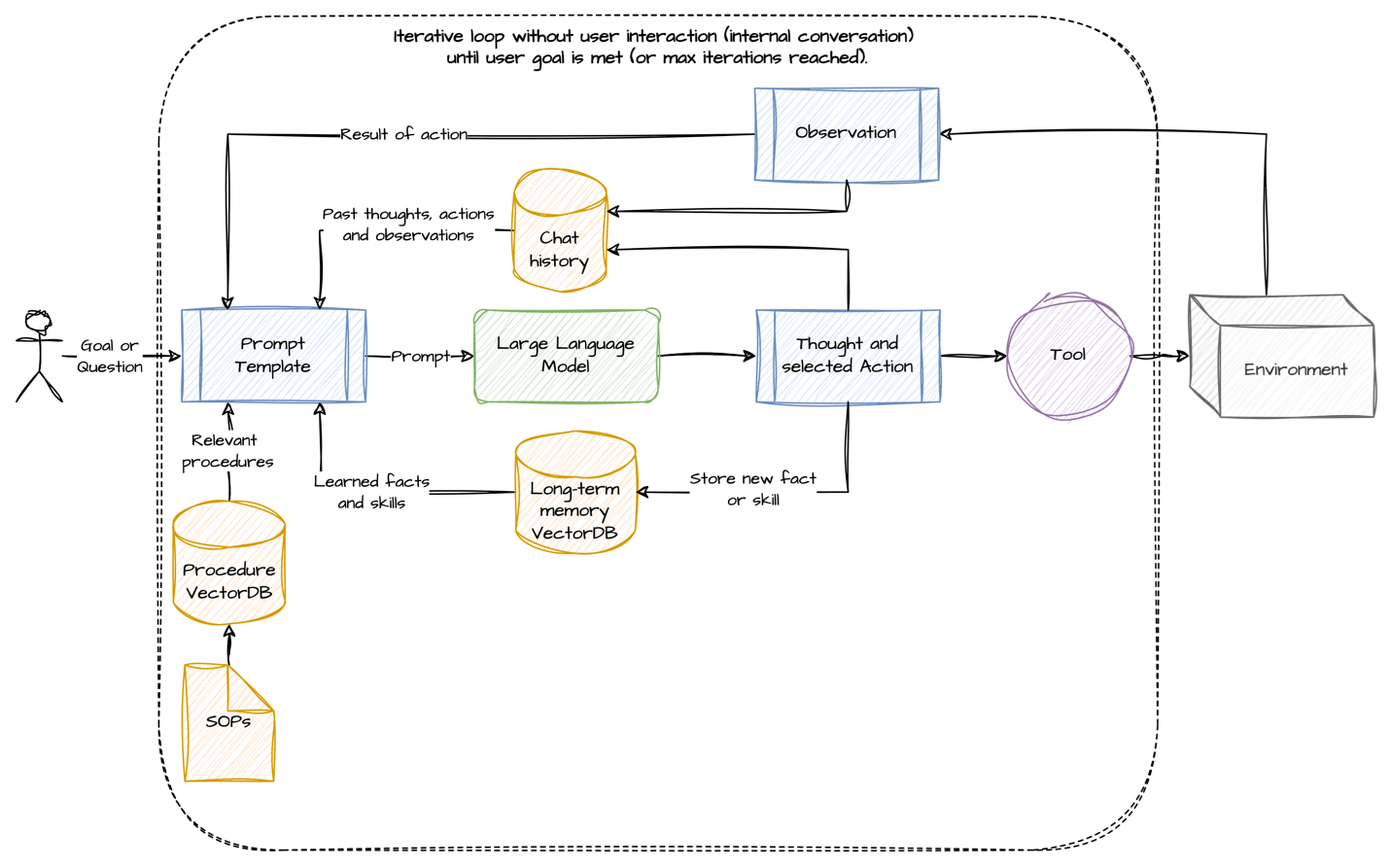

What exactly are the actions, and how does the agent use them? Actions could be as simple as commands to a rig control system or timely recommendations to a human to complete a task; in most cases they are a combination of the two. An enhancement over using actions as simple commands is to give the agent a sandboxed environment where it can execute code that it generates as the action. This gives the agent a lot of flexibility: instead of only triggering individual actions, it can create more complex sequences and loops to achieve a specific goal. An additional advantage of using code as actions is that the agent can build code routines that can be reused to solve similar problems in the future.
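The idea of code as actions can be sketched as follows. The tool names and the generated snippet are hypothetical. Note that restricting builtins in `exec` is used here only to illustrate the concept; it is not a security boundary, and a real deployment would need proper isolation such as a separate process or container.

```python
from typing import Callable, Dict, List, Tuple


def run_generated_code(code: str, tools: Dict[str, Callable]) -> None:
    """Run agent-generated code that can see only the whitelisted tools.

    Illustrative only: limiting builtins does NOT make exec() safe.
    """
    allowed_builtins = {"range": range, "len": len, "min": min, "max": max}
    namespace = {"__builtins__": allowed_builtins, **tools}
    exec(code, namespace)


def make_recording_tools() -> Tuple[Dict[str, Callable], List[str]]:
    """Hypothetical tools that record commands instead of moving equipment."""
    log: List[str] = []

    def drill_stand() -> None:
        log.append("drill_stand")

    def circulate(minutes: int) -> None:
        log.append(f"circulate:{minutes}")

    return {"drill_stand": drill_stand, "circulate": circulate}, log


# Example of what an agent might generate: a loop instead of six single commands.
GENERATED = """
for _ in range(3):
    drill_stand()
    circulate(5)
"""
```

Passing `GENERATED` and the recording tools to `run_generated_code` produces three drill and circulate pairs in the log, while a snippet that tries to `import os` fails because `__import__` is not exposed.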

And this leads to the final piece of the agent, long-term memory. Sometimes not everything the agent needs is included in the procedures and this may lead to the agent creating a plan that is not up to the standards of the user. In those cases, the user can intervene and provide additional directions. The agent can adjust the plan to comply with those directions and will create a ‘fact’ in its long-term memory that can be recalled in the future.

The long-term memory is also used by the agent to store ‘code recipes’ that have successfully worked to accomplish a goal. Those learned skills can then be recalled so that similar tasks can be accomplished faster and more consistently.
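A long-term memory holding both kinds of entries could be sketched as below. This is an assumption-laden illustration: the `LongTermMemory` class is hypothetical, and recall uses simple keyword overlap where a real agent would more likely use embedding similarity.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


def _words(text: str) -> Set[str]:
    """Lowercased words longer than three letters (crude stop-word filter)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3}


@dataclass
class LongTermMemory:
    facts: List[str] = field(default_factory=list)
    recipes: Dict[str, str] = field(default_factory=dict)  # goal -> code

    def remember_fact(self, fact: str) -> None:
        """Store a user correction so it can be applied to future plans."""
        if fact not in self.facts:
            self.facts.append(fact)

    def remember_recipe(self, goal: str, code: str) -> None:
        """Store code that successfully accomplished a goal."""
        self.recipes[goal] = code

    def recall_facts(self, goal: str) -> List[str]:
        """Return facts that share at least one keyword with the goal."""
        return [f for f in self.facts if _words(f) & _words(goal)]

    def recall_recipe(self, goal: str) -> Optional[str]:
        """Return the recipe whose stored goal overlaps most with this goal."""
        best_goal, best_score = None, 0
        for stored_goal in self.recipes:
            score = len(_words(goal) & _words(stored_goal))
            if score > best_score:
                best_goal, best_score = stored_goal, score
        return self.recipes[best_goal] if best_goal is not None else None
```

Recalled facts and recipes would be added to the prompt alongside the retrieved procedures, so that a correction given once by the user keeps shaping later plans.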

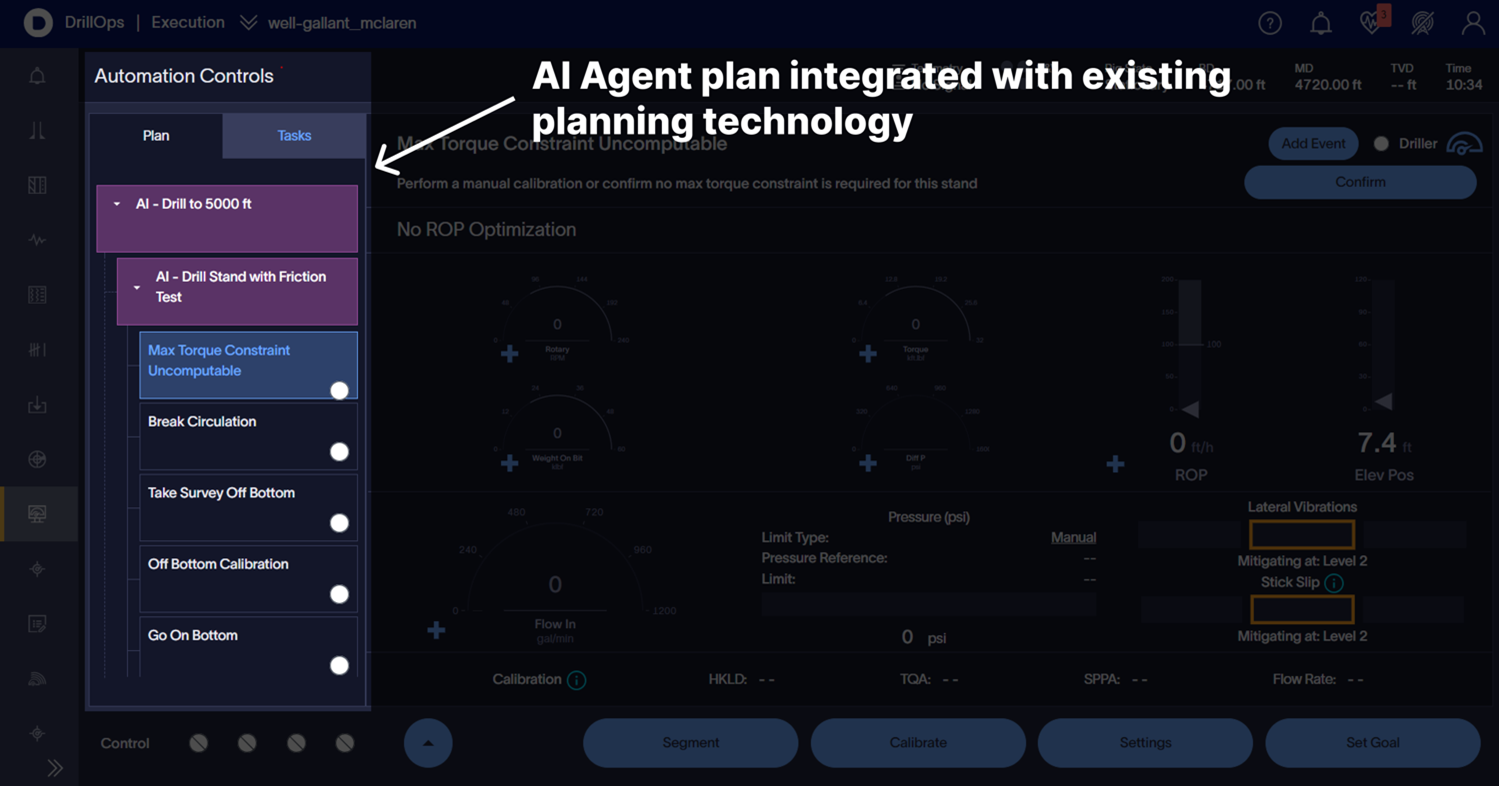

To evaluate the concepts explained above, a demonstrator application was built by integrating the AI agent with the DrillOps Automate platform. This AI agent can generate and execute complex plans, fill gaps in the plan, and take specific actions defined by the user based on user-defined conditions. The embodied agent was given knowledge about drilling a well and the ability to send requests for various procedures to DrillOps. We integrated the plan generated by the embodied agent into the hierarchical AI planning domain used by DrillOps Automate.

Here is an example prompt:

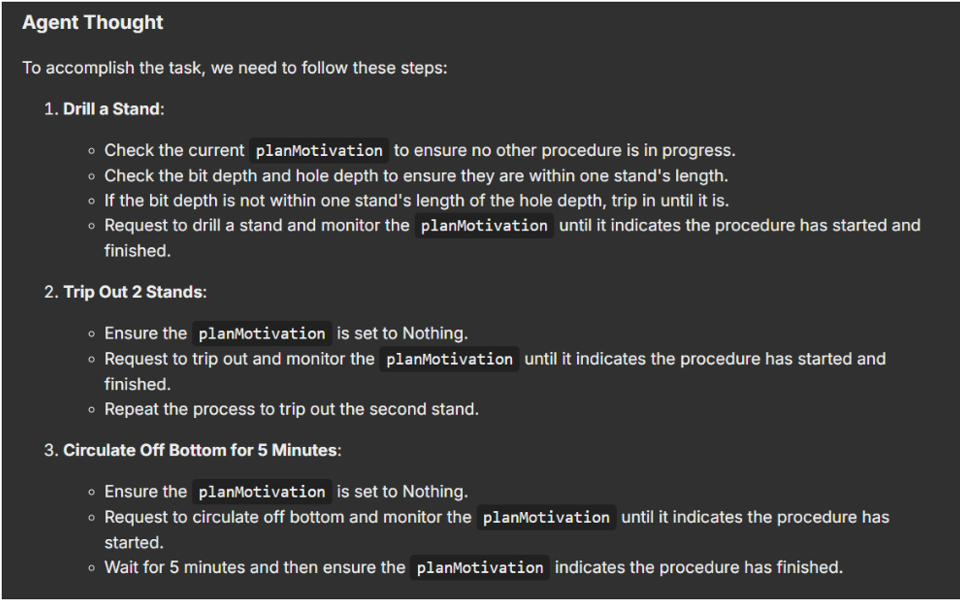

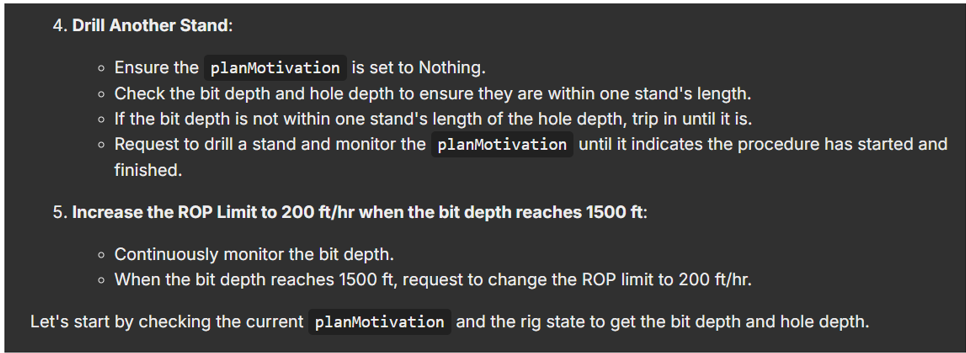

“Drill a stand, then trip out 2 stands, Circulate Off bottom for 5 minutes, drill another stand and increase the ROP (Rate of penetration) limit to 200 ft/hr when you get to 1500 ft”

The AI Agent processes this request and generates a plan:

Next, the AI agent will execute the plan by sending requests to DrillOps. The agent will then monitor DrillOps to track progress and make any necessary adjustments to ensure successful execution:

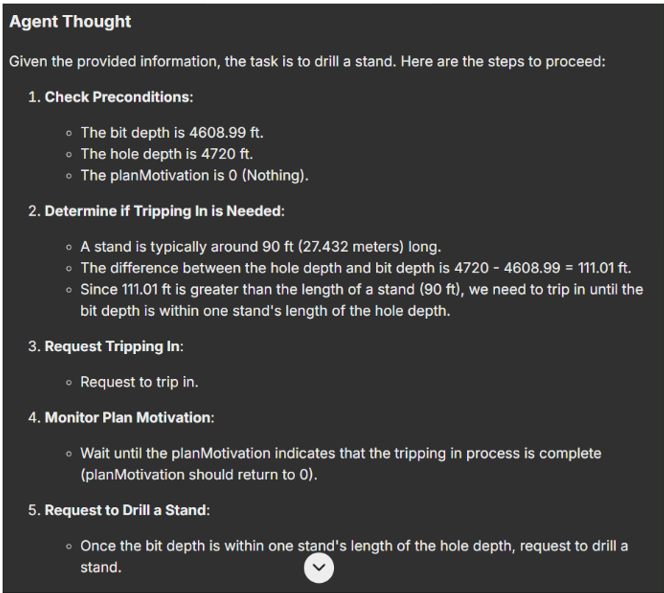

Here is an example of how the agent fills gaps in a requested plan. We asked the agent to drill, but the bit is not in range to drill, so the agent reasons that we need to trip in before we start drilling:

By leveraging the dynamic planning and reasoning capabilities of the embodied agent, we were able to successfully automate the construction of a well while allowing for a wide variety of custom procedures. We could trip to certain depths, change how we clean the hole, perform friction tests and set drilling parameters based on observed rig state, all based on dynamic user input. This agent adds a level of customizability and performance to drilling automation that, to our knowledge, has not been seen in the industry before.

Agentic workflows will change the way humans interact with machines at the wellsite: the rigid automation systems we use today will be much easier to customize in the future. In addition, even when direct equipment control is not available, the agent can still monitor the drilling process and interact with the human in natural language to provide useful insights and instructions on how to safely complete a goal.